In order to test the behavior of a Cassandra cluster during normal operations (overall performance on bare-metal servers, impact of node maintenance, rebalancing, ...), we should run some tests on the Grid'5000 infrastructure.

The POC will be split into several phases:

- Prepare scripts to build the environment, and run small iterations to validate that the tests can be interrupted and resumed

- Validate how the data will be preserved between two cluster restarts

- Write generic scripts that can configure the cluster according to the available hardware (memory / CPU / disks: SSD, SATA or mixed / number of nodes / ...)

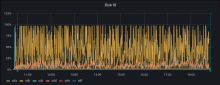
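Most of the hardware-dependent configuration boils down to a few settings derived from RAM, cores and disks. The sketch below assumes the generic scripts compute them per node type; `NodeHardware` and both functions are hypothetical names. The heap rule mirrors the automatic sizing in `cassandra-env.sh`, and the other values follow the rules of thumb written in the comments of `cassandra.yaml`. They are starting points to be validated by the benchmarks, not tuned values.

```python
from dataclasses import dataclass


@dataclass
class NodeHardware:
    """Hardware of one cluster node (hypothetical structure, filled in
    from the node description)."""
    memory_mb: int
    cores: int
    data_drives: int
    disk_type: str  # "ssd", "sata" or "mixed"


def jvm_heap_settings(hw: NodeHardware) -> dict:
    """Heap sizes following the automatic rule of cassandra-env.sh."""
    # MAX_HEAP_SIZE = max(min(1/2 RAM, 1024 MB), min(1/4 RAM, 8192 MB))
    half = min(hw.memory_mb // 2, 1024)
    quarter = min(hw.memory_mb // 4, 8192)
    max_heap = max(half, quarter)
    # HEAP_NEWSIZE (young generation, CMS) = min(100 MB per core, 1/4 of the heap)
    new_size = min(100 * hw.cores, max_heap // 4)
    return {"MAX_HEAP_SIZE": f"{max_heap}M", "HEAP_NEWSIZE": f"{new_size}M"}


def cassandra_yaml_settings(hw: NodeHardware) -> dict:
    """cassandra.yaml values derived from the rules of thumb in its comments."""
    return {
        "concurrent_reads": 16 * hw.data_drives,
        "concurrent_writes": 8 * hw.cores,
        # Assumption: "mixed" nodes are tuned as spinning disks, to be
        # confirmed by the benchmarks
        "disk_optimization_strategy": "ssd" if hw.disk_type == "ssd" else "spinning",
    }
```

For example, a node with 128 GB of RAM (`memory_mb=131072`) and 32 cores gets an 8192 MB heap with a 2048 MB young generation; the same functions can be run for each hardware family to generate its configuration.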

- Add a monitoring stack to measure the cluster behavior

- Import a dataset large enough to be representative of real-world usage (probably during a night or a weekend)

- Define the minimum target to reach for the dataset to be considered representative

- Run benchmarks, and check the cluster behavior and the performance impact during normal operations

- Compare ScyllaDB and vanilla Cassandra performance

- Set up authentication restricting clients to read-only access

- [option] Test backfilling an empty journal from Cassandra. The backfill is based on SQL queries and is highly coupled with PostgreSQL
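Read-only access can be prepared with Cassandra's built-in role management. The sketch below only builds the required settings and CQL statements; `read_only_role_cql` and `AUTH_YAML_SETTINGS` are hypothetical names, and the role, password and keyspace used are placeholders.

```python
from typing import Dict, List

# cassandra.yaml settings enabling password authentication and permissions
# (the defaults, AllowAllAuthenticator / AllowAllAuthorizer, let anyone write).
AUTH_YAML_SETTINGS: Dict[str, str] = {
    "authenticator": "PasswordAuthenticator",
    "authorizer": "CassandraAuthorizer",
}


def read_only_role_cql(role: str, password: str, keyspace: str) -> List[str]:
    """CQL statements creating a login role that can only read `keyspace`.

    `role` and `keyspace` are inserted as unquoted CQL identifiers, so they
    must be plain lowercase names (hypothetical helper, not a library API).
    """
    # Single quotes inside a CQL string literal are escaped by doubling them
    escaped = password.replace("'", "''")
    return [
        f"CREATE ROLE IF NOT EXISTS {role} WITH PASSWORD = '{escaped}' AND LOGIN = true;",
        # SELECT is the only permission granted: writes and schema changes are refused
        f"GRANT SELECT ON KEYSPACE {keyspace} TO {role};",
    ]
```

The statements would be run once by a superuser (e.g. with `cqlsh`) after the `cassandra.yaml` change has been rolled out to every node. On a multi-node cluster, the replication of the `system_auth` keyspace should also be increased, otherwise logins can fail when a node is down.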

The final goals of the experiment are to:

- Define the minimum cluster size that keeps acceptable performance during maintenance operations and node failures. 5 nodes are recommended: with only 3 nodes, an incident puts too much pressure on the remaining nodes, which must handle both the regular load and the recovery

- Possibly test the performance on the different hardware provided by Grid'5000
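The 3 vs 5 nodes recommendation can be illustrated with simple arithmetic. This sketch assumes perfectly balanced token ranges and a replication factor of 3 (an assumption, the RF is not fixed in this plan): when one node fails, its requests are shared by the survivors, and the share of the dataset held by each node is also what must be streamed to rebuild it.

```python
from fractions import Fraction


def load_after_failure(nodes: int, failed: int = 1) -> Fraction:
    """Per-node load of the survivors relative to normal operation,
    assuming the failed nodes' requests are spread evenly over them."""
    if not 0 <= failed < nodes:
        raise ValueError("at least one node must survive")
    return Fraction(nodes, nodes - failed)


def data_share(nodes: int, replication_factor: int) -> Fraction:
    """Fraction of the whole dataset stored on each node (balanced tokens)."""
    return Fraction(min(replication_factor, nodes), nodes)


for n in (3, 5):
    extra = float(load_after_failure(n) - 1)
    share = float(data_share(n, replication_factor=3))
    print(f"{n} nodes: +{extra:.0%} load per survivor, {share:.0%} of the data per node")
# 3 nodes: +50% load per survivor, 100% of the data per node
# 5 nodes: +25% load per survivor, 60% of the data per node
```

With 3 nodes and RF=3, every node holds a full copy of the data, so a rebuild streams the whole dataset while the two survivors already carry 50% extra load; with 5 nodes, both figures drop noticeably.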