Add the possibility to configure the consistency level for read and write requests

Description

Revisions and Commits

| Status | Assigned | Task |
|---|---|---|
| Migrated | gitlab-migration | T2213 Storage |
| Migrated | gitlab-migration | T2214 Scale-out graph and database storage in production |
| Migrated | gitlab-migration | T1892 Cassandra as a storage backend |
| Migrated | gitlab-migration | T3357 Perform some tests of the cassandra storage on Grid5000 |
| Migrated | gitlab-migration | T3396 cassandra - allow to configure the consistency level used by the queries |

Event Timeline

@vlorentz If you have an idea on how to implement that, I'll take it ;). I'm not sure I haven't missed something.

I'm not quite sure what this is about.

If you want to change the consistency level for all queries, then you'll need to read the config from CqlRunner.__init__ instead of using the hardcoded _execution_profiles constant.
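For the global option, a minimal sketch of what reading the levels from the configuration could look like, before they are turned into execution profiles in CqlRunner.__init__. The `consistency_level` key and its `read`/`write` sub-keys are assumptions made for illustration, not the actual swh-storage configuration layout.

```python
# Consistency level names defined by Cassandra.
KNOWN_LEVELS = frozenset({
    "ANY", "ONE", "TWO", "THREE", "QUORUM", "ALL",
    "LOCAL_QUORUM", "EACH_QUORUM", "SERIAL", "LOCAL_SERIAL", "LOCAL_ONE",
})

# Fallback when nothing is configured: ONE for both reads and writes.
DEFAULT_LEVELS = {"read": "ONE", "write": "ONE"}


def parse_consistency_levels(config):
    """Return the read/write consistency level names from a config dict.

    The "consistency_level" key and its "read"/"write" sub-keys are
    hypothetical; missing entries fall back to ONE.
    """
    configured = config.get("consistency_level", {})
    unknown_kinds = set(configured) - set(DEFAULT_LEVELS)
    if unknown_kinds:
        raise ValueError(f"unknown query kinds: {sorted(unknown_kinds)}")

    levels = {}
    for kind, default in DEFAULT_LEVELS.items():
        name = str(configured.get(kind, default)).upper()
        if name not in KNOWN_LEVELS:
            raise ValueError(f"unknown consistency level for {kind}: {name}")
        levels[kind] = name
    return levels
```

With an empty configuration this returns ONE for both kinds, so the existing behaviour stays the default.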

If you want to change it query-per-query, you should define a bunch of execution profiles with Cluster.add_execution_profile, and use them in _execute_with_retries using the execution_profile argument of Session.execute.

As to selecting which profile to use, we will probably need to pass an argument to _execute_with_retries so public methods can tell it which profile to use.
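A rough sketch of the per-query variant, assuming one named profile per kind of query. The helper names `register_profiles` and `execute_with_profile` and the profile names `"read"` and `"write"` are made up for illustration; the real _execute_with_retries also handles retries, which are left out here. The driver imports are done lazily so the selection logic can be exercised without a cluster.

```python
def register_profiles(cluster, levels):
    """Register one execution profile per query kind on a driver Cluster.

    `levels` maps a profile name to a consistency level name, for example
    {"read": "LOCAL_QUORUM", "write": "LOCAL_QUORUM"}.
    """
    # Imported lazily so the rest of the sketch works without the driver.
    from cassandra import ConsistencyLevel
    from cassandra.cluster import ExecutionProfile

    for name, level_name in levels.items():
        profile = ExecutionProfile(
            consistency_level=ConsistencyLevel.name_to_value[level_name]
        )
        cluster.add_execution_profile(name, profile)


def execute_with_profile(session, statement, args, profile="read"):
    """Run a statement using the execution profile with the given name."""
    return session.execute(statement, args, execution_profile=profile)
```

Public methods that write would pass `profile="write"`, and the ones that only read would keep the default.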

And either way, you'll probably want to make the replication policy defined in create_keyspace configurable, by passing a value instead of the hardcoded 1.
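As an illustration only, here is how the keyspace statement could take the replication factor as a parameter. The function name `create_keyspace_statement` and the use of SimpleStrategy are assumptions; the actual create_keyspace may build its query differently.

```python
import re


def create_keyspace_statement(keyspace, replication_factor=1):
    """Build a CREATE KEYSPACE statement with a configurable replication factor."""
    # Keyspace names cannot be bound as query parameters, so validate the
    # identifier before interpolating it.
    if not re.fullmatch(r"[A-Za-z][A-Za-z0-9_]*", keyspace):
        raise ValueError(f"invalid keyspace name: {keyspace!r}")
    if isinstance(replication_factor, bool) or not isinstance(replication_factor, int):
        raise ValueError("replication_factor must be an integer")
    if replication_factor < 1:
        raise ValueError("replication_factor must be at least 1")

    return (
        f"CREATE KEYSPACE IF NOT EXISTS {keyspace} "
        "WITH REPLICATION = "
        f"{{'class': 'SimpleStrategy', 'replication_factor': {replication_factor}}}"
    )
```

Keeping 1 as the default value preserves the current behaviour when nothing is passed.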

IMO, we should first try to have a global configuration for all the read/write queries, and improve that later if needed for performance or if it creates problems. At worst, it will still be possible to fall back to the default ONE values through configuration.

For the record, to add more details, by using the default consistency level ONE:

- at the cluster level, it's more subject to data inconsistency, as only one confirmation of the write is needed (it should be eventually consistent, as that's a promise of cassandra, but possibly only after a long repair process)

- at the replayer level, for example, for origin_visit: a visit is received but the origin is not yet created in the database, so the origin is inserted by the replayer and then read in the origin_visit_add method. There is no guarantee that an up-to-date value is returned unless (LOCAL_)QUORUM consistency is used for both reads and writes.
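The second point comes down to simple arithmetic: a read is sure to hit at least one replica that acknowledged the latest successful write when the number of replicas read plus the number of replicas written is greater than the replication factor. A small sketch of that check, with helper names invented for illustration and LOCAL_QUORUM treated as a quorum within a single datacenter:

```python
def replicas_required(level, replication_factor):
    """Number of replicas that must answer a request at this level."""
    fixed = {"ONE": 1, "TWO": 2, "THREE": 3}
    if level in fixed:
        return fixed[level]
    if level in ("QUORUM", "LOCAL_QUORUM"):
        # A quorum is a strict majority of the replicas.
        return replication_factor // 2 + 1
    if level == "ALL":
        return replication_factor
    raise ValueError(f"unsupported consistency level: {level}")


def read_sees_latest_write(read_level, write_level, replication_factor):
    """True when the read and write replica sets always overlap."""
    r = replicas_required(read_level, replication_factor)
    w = replicas_required(write_level, replication_factor)
    return r + w > replication_factor
```

With a replication factor of 3, ONE/ONE gives 1 + 1 = 2, which is not greater than 3, so a read may miss the origin that was just written; LOCAL_QUORUM for both gives 2 + 2 = 4, so the read and write sets always share a replica.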

Also some links to good articles about the consistency level impacts:

> only one confirmation of the write is needed

It's not perfect though. If the server that confirmed the write breaks before it replicates the write, then the write is lost.

If I'm not wrong, it seems there is a coordinator node between the client and the replicas to handle such cases.

What is not clear is what will happen if the coordinator is one of the replicas and dies in the middle of the write. I suppose it will depend on when exactly it dies.

Still for the record, some docs explaining how the writes are performed: https://cassandra.apache.org/doc/latest/operating/hints.html or more visual here: https://docs.datastax.com/en/cassandra-oss/3.0/cassandra/dml/dmlClientRequestsWrite.html

One example of the wrong behavior of the consistency level ONE for read requests:

One of the monitoring probes is based on the content of the objectcount table.

The servers were hard stopped at the end of the Grid5000 reservation and it seems some replication messages were lost. After the restart, the content of the table is not in sync on all the servers.

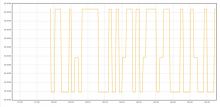

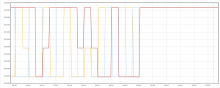

The object count is not the same depending on which server responds first to the query.

After specifying a local_quorum consistency, the values are stable (after 08:58).

The version was used during the last tests on Grid5000. The consistency level was correctly configured.