May 4 2021

The new permissions have been updated by Puppet.

May 3 2021

fix a typo in the commit message

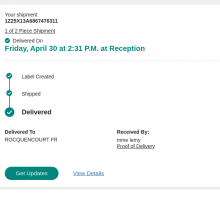

The order seems to have been delivered; I will check with the DSI how we can proceed with the installation.

Apr 23 2021

Logstash now exposes an API server[1] that seems to return some interesting metrics on plugin behavior.

For example, there is a section for the Elasticsearch output plugin:

      "outputs": [
        {
          "id": "62d11c4234b8981da77a97955da92ac9de92b9a6dcd4582f407face31fd5c664",
          "events": {
            "duration_in_millis": 160089636,
            "in": 72818126,
            "out": 72818046
          },
          "bulk_requests": {
            "responses": {
              "200": 3860888
            },
            "successes": 3860888
          },
          "documents": {
            "successes": 72818046
          },
          "name": "elasticsearch"
        }
      ]
    },

As a first step, I'll try to implement a small Python script that checks whether any response code other than 200 appears, to identify the behavior.
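Below is a minimal sketch of such a script. It assumes the stats come from the Logstash node stats API. The URL `http://localhost:9600/_node/stats/pipelines` is an assumption about host, port and path. It also assumes each pipeline lists its plugins under `plugins.outputs`, as in the excerpt above. The parsing is kept separate from the HTTP call so it can be tried on a saved payload.

```python
import json
import urllib.request

# Assumed endpoint: Logstash monitoring API on its default port (adjust as needed).
STATS_URL = "http://localhost:9600/_node/stats/pipelines"


def fetch_stats(url: str = STATS_URL) -> dict:
    """Download and decode the pipeline statistics JSON."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


def non_200_responses(stats: dict) -> dict:
    """Return {(pipeline, plugin id): {code: count}} for every non-200 bulk response.

    Assumes the layout pipelines -> <name> -> plugins -> outputs -> [plugin, ...],
    where Elasticsearch outputs carry bulk_requests.responses as in the excerpt.
    """
    anomalies = {}
    for pipeline_name, pipeline in stats.get("pipelines", {}).items():
        for output in pipeline.get("plugins", {}).get("outputs", []):
            responses = output.get("bulk_requests", {}).get("responses", {})
            unexpected = {code: n for code, n in responses.items() if code != "200"}
            if unexpected:
                anomalies[(pipeline_name, output.get("id"))] = unexpected
    return anomalies
```

On the Logstash host, run `print(non_200_responses(fetch_stats()))`. An empty dict means every bulk request recorded so far returned 200.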

It may also be interesting to check other properties, such as the queue size:

    "queue": {
      "type": "memory",
      "events_count": 0,
      "queue_size_in_bytes": 0,
      "max_queue_size_in_bytes": 0
    },

I checked the icinga_logstash plugin[1] to see whether it could help, but it is geared toward Logstash instances that ingest data from log files. For example, it has no option to check the number of events received or sent.
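The icinga_logstash plugin has no such option, so a custom check could do it instead. It would compare the events received and sent by each output and read the queue counter from the same stats payload. The sketch below assumes the usual Nagios/Icinga plugin exit codes: 0 for OK, 1 for WARNING, 2 for CRITICAL. The `max_pending` threshold is a hypothetical parameter; nothing in these notes defines it.

```python
OK, WARNING, CRITICAL = 0, 1, 2  # Nagios/Icinga plugin exit-code convention


def check_pipeline(pipeline: dict, max_pending: int = 10_000) -> tuple:
    """Return (status, message) for one pipeline entry of the node stats payload.

    max_pending is a hypothetical threshold for events that an output has
    received but not yet sent, and for events waiting in the pipeline queue.
    """
    status, parts = OK, []
    for output in pipeline.get("plugins", {}).get("outputs", []):
        events = output.get("events", {})
        pending = events.get("in", 0) - events.get("out", 0)
        parts.append(f"{output.get('name', output.get('id'))}: {pending} in flight")
        if pending > max_pending:
            status = max(status, WARNING)
    queued = pipeline.get("queue", {}).get("events_count", 0)
    parts.append(f"queue: {queued} events")
    if queued > max_pending:
        status = max(status, CRITICAL)
    return status, "; ".join(parts)
```

An Icinga command wrapper would print the message, then call `sys.exit(status)`. With the values from the excerpts above, the check reports 80 events in flight for the Elasticsearch output (72818126 in, 72818046 out) and an empty memory queue.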

According to the tracking page, the order left the factory on Apr 22, 2021. The ETA is May 28, 2021*.

The authors are now displayed on staging and production (webapp1).

The production lag can be followed here: https://grafana.softwareheritage.org/goto/Di2H3z9Gk

(staging has already recovered)

swh-counters is deployed in production too:

- upgrade the swh-counters package and restart the swh-counters backend and journal client:

    root@counters1:~# apt dist-upgrade
    ...
    Setting up python3-swh.counters (0.7.0-1~swh1~bpo10+1) ...
    root@counters1:~# systemctl stop swh-counters-journal-client.service
    root@counters1:~# systemctl restart gunicorn-swh-counters.service
    root@counters1:~# systemctl start swh-counters-journal-client.service
    root@counters1:~# redis-cli pfcount person
    (integer) 7

The person count has already started.

- stop the journal client to be able to reset the release and revision offsets:

    root@counters1:~# systemctl stop swh-counters-journal-client.service

- reset the offsets

    vsellier@kafka1 ~ % /opt/kafka/bin/kafka-consumer-groups.sh --bootstrap-server $SERVER --reset-offsets --all-topics --to-current --dry-run --export --group swh.counters.journal_client 2>&1 > ~/counters_journal_client_offsets.csv
    # revision reset
    vsellier@kafka1 ~ % /opt/kafka/bin/kafka-consumer-groups.sh --bootstrap-server $SERVER --reset-offsets --group swh.counters.journal_client --to-earliest --execute --topic swh.journal.objects.revision
    # release reset
    vsellier@kafka1 ~ % /opt/kafka/bin/kafka-consumer-groups.sh --bootstrap-server $SERVER --reset-offsets --group swh.counters.journal_client --to-earliest --execute --topic swh.journal.objects.release
    # checks
    /opt/kafka/bin/kafka-consumer-groups.sh --bootstrap-server $SERVER --reset-offsets --all-topics --to-current --dry-run --export --group swh.counters.journal_client 2>&1 > ~/counters_journal_client_offsets-backfill.csv
    vsellier@kafka1 ~ % diff ~/counters_journal_client_offsets.csv ~/counters_journal_client_offsets-backfill.csv | less
    1c1
    < "swh.journal.objects.revision",25,8275180
    ---
    > "swh.journal.objects.revision",25,0
    8c8
    < "swh.journal.objects.release",128,78484
    ---
    > "swh.journal.objects.release",128,0
    16c16
    ...

- journal client restarted

    root@counters1:~# systemctl start swh-counters-journal-client.service

- the person counter is growing quickly (+8,260 in 3 seconds):

    root@counters1:~# date;redis-cli pfcount person
    Fri 23 Apr 2021 10:55:54 AM UTC
    (integer) 72358
    root@counters1:~# date;redis-cli pfcount person
    Fri 23 Apr 2021 10:55:57 AM UTC
    (integer) 80618
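The `diff ... | less` check from the offset reset step can also be scripted. The sketch below parses two exports in the `"topic",partition,offset` format visible in the diff output above. It then lists the partitions whose committed offset changed. The helper names are illustrative and are not part of the swh or Kafka tooling.

```python
import csv
import io


def load_offsets(text: str) -> dict:
    """Parse `kafka-consumer-groups.sh --export` CSV text into {(topic, partition): offset}."""
    offsets = {}
    for row in csv.reader(io.StringIO(text)):
        if len(row) != 3:
            continue  # skip blank or unexpected lines
        topic, partition, offset = row
        try:
            offsets[(topic, int(partition))] = int(offset)
        except ValueError:
            continue  # skip lines that are not topic,partition,offset records
    return offsets


def changed_partitions(before: dict, after: dict) -> dict:
    """Return {(topic, partition): (old_offset, new_offset)} where the offset moved."""
    return {
        key: (before.get(key), after.get(key))
        for key in sorted(before.keys() | after.keys())
        if before.get(key) != after.get(key)
    }
```

Try it on `~/counters_journal_client_offsets.csv` and `~/counters_journal_client_offsets-backfill.csv`. It should report only revision and release partitions, each moved back to its earliest offset.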

- only keep the topic configuration, as the journal split is no longer needed

- fix the typo in the commit message

I hesitated to do it, but since it should no longer change now that everything is reactivated, I chose to keep it as is.

We'll see whether this list changes in the near future.

- version 0.7.0 released with the latest improvement (D5576) from vlorentz (thanks)

- deployment done in staging

- person counting has started on the live messages:

    root@counters0:~# redis-cli
    127.0.0.1:6379> pfcount person
    (integer) 7

- now let's reset the consumer offsets for the release and revision topics to backfill the person counter:

    # offsets backup
    /opt/kafka/bin/kafka-consumer-groups.sh --bootstrap-server $SERVER --reset-offsets --all-topics --to-current --dry-run --export --group swh.counters.journal_client 2>&1 > ~/counters_journal_client_offsets.csv
    # revision reset
    /opt/kafka/bin/kafka-consumer-groups.sh --bootstrap-server $SERVER --reset-offsets --group swh.counters.journal_client --to-earliest --execute --topic swh.journal.objects.revision
    # release reset
    /opt/kafka/bin/kafka-consumer-groups.sh --bootstrap-server $SERVER --reset-offsets --group swh.counters.journal_client --to-earliest --execute --topic swh.journal.objects.release
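`pfcount` is the Redis HyperLogLog cardinality command. This is why replaying the revision and release topics from the start is harmless: adding the same person twice does not change the estimate. Below is a minimal, self-contained HyperLogLog to illustrate the idea. It is a textbook version with a 64-bit BLAKE2b hash, 2^12 registers and only the small-range correction. It is not Redis's implementation, which uses its own hash and parameters.

```python
import hashlib
import math


class HyperLogLog:
    """Textbook HyperLogLog distinct counter (illustrative, not Redis's code)."""

    def __init__(self, p: int = 12) -> None:
        self.p = p
        self.m = 1 << p                              # number of registers
        self.registers = [0] * self.m
        self.alpha = 0.7213 / (1 + 1.079 / self.m)   # bias constant for m >= 128

    def add(self, item: str) -> None:
        digest = hashlib.blake2b(item.encode("utf-8"), digest_size=8).digest()
        h = int.from_bytes(digest, "big")            # 64-bit hash
        index = h >> (64 - self.p)                   # first p bits pick a register
        rest = h & ((1 << (64 - self.p)) - 1)        # remaining 64 - p bits
        # Rank = position of the leftmost 1 bit in `rest` (all zeros gives the max).
        rank = (64 - self.p) - rest.bit_length() + 1
        if rank > self.registers[index]:
            self.registers[index] = rank

    def count(self) -> int:
        estimate = self.alpha * self.m ** 2 / sum(2.0 ** -r for r in self.registers)
        zeros = self.registers.count(0)
        if estimate <= 2.5 * self.m and zeros:
            # Small-range correction: fall back to linear counting.
            estimate = self.m * math.log(self.m / zeros)
        return round(estimate)
```

Re-adding the same keys leaves every register unchanged, so the count after a backfill replay only grows with genuinely new persons. In Redis the same operations are `PFADD person <id>` and `PFCOUNT person`.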

The VM is configured, and the new database schema has been created on the staging database.

psql is also configured with several services:

- swh-mirror: read-only connection on the swh-mirror schema

- admin-swh-mirror: r/w connection

- swh: read-only connection on the archive database (staging)

In D5576#141670, @vlorentz wrote:
> In D5576#141650, @vsellier wrote:
> > I suppose the message.value() is returning a copy of the content

(Py_INCREF only increments the reference counter)
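The `Py_INCREF` remark can be illustrated in pure Python. Suppose, as incrementing the reference count implies, that `value()` hands back a new reference to the bytes object the message already holds. Then storing it in a dict does not duplicate the payload. `FakeMessage` below is a hypothetical stand-in, not the confluent-kafka message class.

```python
class FakeMessage:
    """Hypothetical stand-in for a Kafka message that stores its payload once."""

    def __init__(self, payload: bytes) -> None:
        self._payload = payload

    def value(self) -> bytes:
        # Hand back the stored object itself: in C this is Py_INCREF + return,
        # so only the reference count changes and no bytes are duplicated.
        return self._payload


message = FakeMessage(b"x" * 1_000_000)
cache = {"key": message.value()}  # keeps a reference, not a second 1 MB buffer

# An explicit copy, for contrast: equal content, but a distinct object.
copied = bytes(bytearray(message.value()))
```

The dict still keeps every payload alive until it is cleared. Memory therefore grows with the number of buffered messages, but the content is not stored twice.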

limit the pre-configured databases to the main database + swh-mirror

Apr 22 2021

I suppose this implementation will be less efficient in terms of memory consumption, as we would keep a copy of the message contents in the dict (assuming message.value() returns a copy of the content).

It also makes the module aware of how objects are serialized in Kafka, which seems quite low-level.

VM created by Terraform:

    mirror-tests_summary =
    hostname: mirror-test
    fqdn: mirror-test.internal.staging.swh.network
    network: ip=192.168.130.160/24,gw=192.168.130.1 macaddrs=E6:3C:8A:B7:26:5D

VM declared in the inventory: https://inventory.internal.softwareheritage.org/virtualization/virtual-machines/103/

The future IP will be 192.168.130.160.

Thanks for the diff you will propose. I will land this one in the meantime.

It's for performance reasons only: for most of the counters, counting the keys is enough, since the key is the object's unique identifier in Kafka.

The KeyOrientedJournalClient[1] bypasses object deserialization when a message is received, so a more classic client is needed for this specific Person case.
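For the Person counter, the message value has to be deserialized, because persons are embedded in revision and release objects rather than being message keys. The sketch below shows the per-message work under several assumptions. Values are already decoded into dicts, and revisions carry `author`/`committer` entries while releases carry only `author`. Each entry has a `fullname`, and a person is identified by that fullname. The function name and the plain `set` used in place of the Redis HyperLogLog are illustrative.

```python
from typing import Iterable, Optional, Set


def persons_from_objects(object_type: str, objects: Iterable[dict]) -> Set[bytes]:
    """Collect the distinct person identifiers found in decoded journal objects.

    Assumption: revisions carry 'author' and 'committer', releases only 'author',
    each being a dict with a 'fullname' bytes value (release authors may be None).
    """
    fields = {"revision": ("author", "committer"), "release": ("author",)}.get(object_type, ())
    persons: Set[bytes] = set()
    for obj in objects:
        for field in fields:
            person: Optional[dict] = obj.get(field)
            if person and person.get("fullname"):
                persons.add(person["fullname"])
    return persons
```

The key-oriented client never pays for this decoding step, which is why it stays cheaper for the other counters.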

add missing docstrings

Update according to the reviews' feedback

Apr 21 2021

Puppet resources cleaned:

    root@pergamon:~# /usr/local/sbin/swh-puppet-master-decomission clearly-defined.internal.staging.swh.network
    + puppet node deactivate clearly-defined.internal.staging.swh.network
    Submitted 'deactivate node' for clearly-defined.internal.staging.swh.network with UUID 26eb9a73-add9-4745-b068-6106ab2b20b4
    + puppet node clean clearly-defined.internal.staging.swh.network
    Notice: Revoked certificate with serial 256
    Notice: Removing file Puppet::SSL::Certificate clearly-defined.internal.staging.swh.network at '/var/lib/puppet/ssl/ca/signed/clearly-defined.internal.staging.swh.network.pem'
    clearly-defined.internal.staging.swh.network
    + puppet cert clean clearly-defined.internal.staging.swh.network
    Warning: `puppet cert` is deprecated and will be removed in a future release.
       (location: /usr/lib/ruby/vendor_ruby/puppet/application.rb:370:in `run')
    Notice: Revoked certificate with serial 256
    + systemctl restart apache2

- vm destroyed

- configuration removed for terraform

- database schemas cleared:

- before:

    root@db1:~# zpool list
    NAME   SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
    data  27.3T   623G  26.7T        -         -    16%     2%  1.00x    ONLINE  -
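The `before` snapshot could be compared with a later run by parsing the `zpool list` output. The sketch below assumes the default columns shown above and treats the size suffixes as powers of 1024. The helper names are illustrative.

```python
UNITS = {"B": 1, "K": 1024, "M": 1024 ** 2, "G": 1024 ** 3, "T": 1024 ** 4, "P": 1024 ** 5}


def to_bytes(size: str) -> float:
    """Convert a human-readable zpool size such as '27.3T' to bytes."""
    if size[-1] in UNITS:
        return float(size[:-1]) * UNITS[size[-1]]
    return float(size)


def parse_zpool_list(output: str) -> list:
    """Turn `zpool list` text output into a list of {column: value} dicts."""
    lines = [line.split() for line in output.strip().splitlines()]
    header, rows = lines[0], lines[1:]
    return [dict(zip(header, row)) for row in rows]


def used_fraction(pool: dict) -> float:
    """ALLOC / SIZE, i.e. what the CAP column shows as a rounded percentage."""
    return to_bytes(pool["ALLOC"]) / to_bytes(pool["SIZE"])
```

With the snapshot above, `used_fraction` gives about 2.2% (623G of 27.3T), which the CAP column shows as 2%. `zpool list -Hp` prints exact byte counts without a header, which would avoid the suffix parsing.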

lgtm

Apr 20 2021

The 2 disks were removed from the server and packaged to be sent to Seagate.

The order was received and confirmed by Dell. ETA: May 28th.

The details were sent to the sysadm mailing list.