Considering it looks like there will be a lot of new indexed object, we must be sure the chosen solution will be able to scale up in the future

Possible identified options:

- Deploy a new elasticsearch instance on esnode* [1]

- Build a new cluster with new bare metal servers [2]

- extends current cluster storage [3]

[1] Some drawbacks:

- there is 32Go of memory on the servers, 16G are allocated to the current elasticsearches, in the short term, we can use 8go per instance but we must be sure the log cluster is functional with only 8go

- Possible solution: Increase memory (4 memory slots are still available on the servers)

- the current cluster use 3To per node for a total of 7To, which could be too small to keep the replication factor in case of a failure of one node. There is no more slot available to add new disks, the possible solutions:

- reducing the retention delay of the logs (estimated gain 2To)

- Replace disk by bigger ones

[2] 3 new servers 1U 4x2.4To (4.8Toeffective) 32Go memory cost: ~8500e (possible to have up to 8 disks)

[3] The storage is on the ceph cluster can be increased as there are several free disk slots on beaubourg/hypervisor3/branly

Initial discussion on irc:

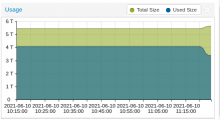

11:25 <+vsellier> we will have to check the elasticsearch behavior with production volume 11:26 <+vsellier> the size increases have been important on staging 11:26 <+vsellier> around x5 11:26 <+vsellier> (the index size) 11:27 <+vsellier> if the ratio is the same for production, the bump could be from 250g to 1.2T 11:28 <+vsellier> the search-esnode are not yet sized for this 11:31 <vlorentz> shouldn't we stop the metadata ingestion until they are? 11:31 <+vsellier> (they are vms on proxmox, we will have to think where we can find so much space) 11:32 <+vsellier> the search journal client is not deployed so no problem for the moment, nothing is sent to ES 11:33 <+vsellier> (I mean not deployed in production, it is only on staging)