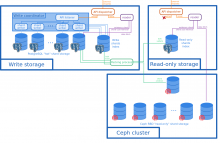

Assuming there exists an object storage as described in T3054, it needs a backend to store and retrieve objects. The backend is named winery (using the "winery namespace" agreed on the mailing list last week). The implementation replaces the object storage at the bottom of the global architecture.

The backend runs in a *sgi app. At boot time it:

- Gets an exclusive lock on a Shard in the Write Storage (creating a new one or resuming an existing one)

- Gets a connection to the Write Storage and the global index

- Registers for throttling purposes (probably a table in the database)

- Serves both reads and writes from and to the Read Storage and the Write Storage
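The boot sequence above can be sketched as follows. This is only an illustration under stated assumptions: the `shards` and `throttle` tables, their columns, and the `acquire_shard` and `boot` helpers are hypothetical names, and an in-memory SQLite database stands in for the Write Storage database, which this description does not specify.

```python
import sqlite3
import uuid


def acquire_shard(db, worker_id):
    """Resume an unlocked writable shard, or create a new one. Returns the shard id."""
    with db:  # single transaction: commit on success, roll back on error
        row = db.execute(
            "SELECT id FROM shards "
            "WHERE locked_by IS NULL AND state = 'writable' LIMIT 1"
        ).fetchone()
        if row is None:
            # No shard to resume: create a new one, already locked by this worker.
            shard_id = uuid.uuid4().hex
            db.execute(
                "INSERT INTO shards (id, state, locked_by) VALUES (?, 'writable', ?)",
                (shard_id, worker_id),
            )
            return shard_id
        # Resume the existing shard by taking its lock.
        db.execute(
            "UPDATE shards SET locked_by = ? WHERE id = ? AND locked_by IS NULL",
            (worker_id, row[0]),
        )
        return row[0]


def boot(db, worker_id):
    """Hypothetical boot sequence: lock a shard, then register for throttling."""
    db.execute(
        "CREATE TABLE IF NOT EXISTS shards "
        "(id TEXT PRIMARY KEY, state TEXT, locked_by TEXT)"
    )
    db.execute("CREATE TABLE IF NOT EXISTS throttle (worker_id TEXT PRIMARY KEY)")
    shard_id = acquire_shard(db, worker_id)
    with db:
        # Registration for throttling, assumed here to be one row per worker.
        db.execute(
            "INSERT OR REPLACE INTO throttle (worker_id) VALUES (?)", (worker_id,)
        )
    return shard_id
```

In a real deployment the lock would have to be exclusive across processes (for instance with row-level locking in the database); the single-connection transaction here only shows the order of the steps.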

When the Shard it is responsible for in the Write Storage is full, it:

- Stops accepting requests

- Writes the Shard to the Read Storage

- Shuts down
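The end-of-life steps above can be sketched as a small state machine. The `Worker` class, its `max_bytes` threshold, and the dictionary standing in for the Read Storage are all assumptions made for illustration; the description does not say how "full" is measured or how a Shard is laid out in the Read Storage.

```python
class ShardFull(Exception):
    """Raised when a request reaches a worker whose Shard is already full."""


class Worker:
    def __init__(self, shard_name, max_bytes, read_storage):
        self.shard_name = shard_name
        self.max_bytes = max_bytes        # assumed definition of "full"
        self.read_storage = read_storage  # stand-in: shard name -> packed objects
        self.objects = {}                 # stand-in for the Shard in the Write Storage
        self.size = 0
        self.accepting = True
        self.running = True

    def add(self, obj_id, content):
        if not self.accepting:
            raise ShardFull(self.shard_name)
        if obj_id not in self.objects:
            self.objects[obj_id] = content
            self.size += len(content)
        if self.size >= self.max_bytes:
            self.retire()

    def retire(self):
        # 1. Stop accepting requests.
        self.accepting = False
        # 2. Write the Shard to the Read Storage.
        self.read_storage[self.shard_name] = dict(self.objects)
        self.objects.clear()
        # 3. Shut down.
        self.running = False
```

For example, a worker created with `max_bytes=10` retires itself as soon as the objects it holds reach 10 bytes, and any later `add` call raises `ShardFull`.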

Spawning the *sgi app or controlling the number of workers is not in the scope of this implementation.