Currently, the network management of the gateways is done manually with some iptables rules and custom route management.

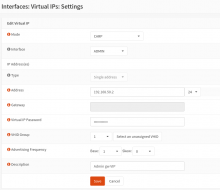

A software router would centralize the firewall rules and the network configuration (such as the VPNs) and simplify their management.

As pfSense is a well-known solution in the network management community, the test will initially target it to check whether it meets our needs.

List of tasks (copied from the first comment):

- Partially done (ping issue): test whether an interface on VLAN1300 works, since the hypervisor should already be configured correctly.

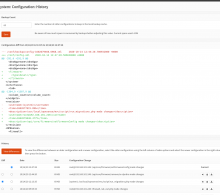

- Test the high-availability (HA) options [1]

- Test configuration traceability [2]: the plugin is not yet available for the current version.

- VPN [4]

  - Test IPsec VPN / Azure compatibility

  - Test OpenVPN and certificate management

- Test the monitoring capabilities and the Prometheus integration, via an SNMP exporter [5] or NetFlow (there are many online resources on Prometheus/Grafana integration [6]).
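For the SNMP route, Prometheus does not poll the device directly: it scrapes the SNMP exporter, which in turn queries the device passed as the `target` parameter. The sketch below builds such a scrape job using the relabeling pattern documented for `snmp_exporter`. It assumes SNMP is enabled on the pfSense box, the exporter is reachable at the hypothetical address `snmp-exporter:9116`, and the `if_mib` module exists in the exporter's `snmp.yml`; the job name and target hostname are placeholders, and newer exporter versions may also need an `auth` parameter.

```python
import json


def snmp_scrape_config(targets, exporter="snmp-exporter:9116",
                       module="if_mib", job="pfsense-snmp"):
    """Build a Prometheus scrape job that polls SNMP devices through snmp_exporter.

    Hostnames, port and job name are illustrative assumptions, not our setup.
    """
    return {
        "job_name": job,
        "static_configs": [{"targets": list(targets)}],
        "metrics_path": "/snmp",
        "params": {"module": [module]},
        "relabel_configs": [
            # Pass the device address to the exporter as the ?target= parameter.
            {"source_labels": ["__address__"], "target_label": "__param_target"},
            # Keep the device address as the "instance" label of the series.
            {"source_labels": ["__param_target"], "target_label": "instance"},
            # Send the actual HTTP scrape to the exporter, not to the device.
            {"target_label": "__address__", "replacement": exporter},
        ],
    }


if __name__ == "__main__":
    config = {"scrape_configs": [snmp_scrape_config(["pfsense.example.internal"])]}
    # JSON output is accepted by YAML parsers, so it can be pasted into prometheus.yml.
    print(json.dumps(config, indent=2))
```

The same job can list several gateways in `targets`; each one becomes a separate `instance` while all requests go through a single exporter.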