This task made good progress today. I spent a little while going through our logs to understand our performance margins.

I used the uwsgi logs from uffizi and the celery logs from prado / softwareheritage-log.

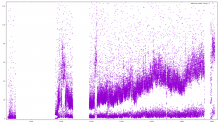

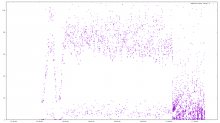

Sample results (showing time vs. "successful import time"):

During this trial run, we noticed a few things:

- rDLDG1f100e: names were sent as unicode objects instead of bytes, breaking COPY in subtle ways (as well as preventing the import of non-UTF-8 encoded files). A few dozen repos failed to import because of that.

- The _name attribute depends on a yet-unmerged PR in pygit2. This version has been packaged and deployed on our workers and is available for sid on our repo too.

- rDSTO07c8f444 got rid of our biggest bottleneck: the swh_foo_missing functions, which used EXCEPT, did a full sequential scan of the EXCEPT-ed table...

- Guess when the fix was deployed...
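To illustrate the first point above (names as bytes rather than unicode), here is a minimal sketch of why a name that is not valid UTF-8 cannot survive a trip through a text type. The file names and the helper `decode_name_or_none` are made up for the example; this is not the loader's actual code.

```python
def decode_name_or_none(raw_name: bytes):
    """Return the name as text if it is valid UTF-8, else None (hypothetical helper)."""
    try:
        return raw_name.decode("utf-8")
    except UnicodeDecodeError:
        return None


# The same human-readable name, stored with two different encodings.
utf8_name = "café.txt".encode("utf-8")      # b'caf\xc3\xa9.txt'
latin1_name = "café.txt".encode("latin-1")  # b'caf\xe9.txt'

# The UTF-8 name decodes fine; the latin-1 one is not valid UTF-8.
print(decode_name_or_none(utf8_name))
print(decode_name_or_none(latin1_name))

# Kept as bytes, both names round-trip unchanged, whatever their encoding.
assert bytes(latin1_name) == b"caf\xe9.txt"
```

Since git does not enforce any encoding on names, treating them as opaque bytes end to end avoids having to guess one.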

We should be able to get some meaningful data by the beginning of next week. But the latest results look very promising!

(Thanks for making me play with an IPython notebook for the first time, it's a pretty impressive environment for playing with scientific data.)

Based on that data, here are the current average/stddev processing times per repository, computed over the first ~14k random repositories loaded (~1% of our total):

- average: 14.59 s

- stddev: 254.6 s

Projecting to 14M repositories, we obtain a total processing time of ~74 days.

(Threats to validity: 1% is still a small sample, stddev is pretty high.)
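For reference, the arithmetic behind this kind of projection can be sketched as follows. Note that 14.59 s × 14M repositories is roughly 2,364 days of sequential processing, so a ~74 day figure implies the work is spread over parallel workers. The worker count of 32 below is an assumption that happens to reproduce the ETAs quoted in this thread; it is not a number stated here, and the function names are only illustrative.

```python
import statistics

SECONDS_PER_DAY = 86_400


def summarize(durations):
    """Return (mean, population stddev) of per-repository durations, in seconds."""
    return statistics.mean(durations), statistics.pstdev(durations)


def eta_days(avg_seconds, total_repos, workers=1):
    """Days needed to process total_repos at avg_seconds each,
    assuming the load is spread evenly over `workers` parallel workers."""
    return avg_seconds * total_repos / workers / SECONDS_PER_DAY


TOTAL_REPOS = 14_000_000
ASSUMED_WORKERS = 32  # assumption, inferred from the quoted ETAs

# Sequential time vs. time with the assumed parallelism.
print(f"{eta_days(14.59, TOTAL_REPOS):.0f} days sequentially")
print(f"{eta_days(14.59, TOTAL_REPOS, ASSUMED_WORKERS):.1f} days with {ASSUMED_WORKERS} workers")
```

With a stddev this much larger than the mean, the average is dominated by a few very slow repositories, so the projection should be read as an order of magnitude only.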

Here's a slightly modified version of the above IPython notebook, with average/stddev/ETA computations.

Right :)

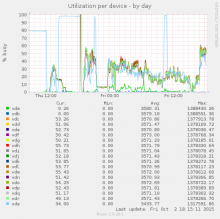

So, for the record, here are the stats looking back 48 hours from now, spanning 221k repositories, i.e., ~1.6% of 14M repositories:

- average: 19.8 s

- stddev: 323 s

- ETA: 100 days

As just discussed on IRC, it'd be interesting to monitor the moving average, to see if there are relevant tendencies there.
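A moving average over the per-repository processing times could be computed along these lines. This is only a sketch: the window size and the sample durations are made up, and the real input would be the successful import times extracted from the logs.

```python
from collections import deque


def moving_average(values, window):
    """Yield the mean of the last `window` values, once `window` values have been seen."""
    if window <= 0:
        raise ValueError("window must be positive")
    recent = deque(maxlen=window)
    total = 0.0
    for value in values:
        if len(recent) == window:
            # The oldest value is about to be evicted by append(); drop it from the sum.
            total -= recent[0]
        recent.append(value)
        total += value
        if len(recent) == window:
            yield total / window


# Hypothetical processing times in seconds, in completion order.
durations = [12.0, 15.0, 9.0, 240.0, 11.0, 14.0]
for avg in moving_average(durations, window=3):
    print(f"{avg:.1f}")
```

Given the high stddev, a single slow repository will visibly spike a short window, so a fairly large window (or a moving median) may show trends more clearly.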