- ✅ Ask for special permission to use the cluster for 9 days

- ✅ Reserve 30 machines for the Read Storage + 3 machines for the Write Storage for 216 hours starting August 6th 7pm

- ✅ August 6th 7pm, install the latest version of the benchmark software T3149 and prepare the run

- ✅ Populate the global index with 10 billion entries

- ✅ August 7th 7pm, run the benchmark with 40TB

- ✅ August 16th, analyze the results.

Description

| Status | Assigned | Task |
|---|---|---|
| Migrated | gitlab-migration | T3116 Roll out at least one operational mirror |
| Migrated | gitlab-migration | T3054 Scale out object storage design |
| Migrated | gitlab-migration | T3422 Running the benchmarks: August 6th, 2021, 9 days |

Event Timeline

Special permission request sent:

Hello,

Account: https://api.grid5000.fr/stable/users/ user ldachary

Laboratory: Software Heritage Special Task Force Unit Detached

Project: Software Heritage

In the past months a novel object storage architecture was designed[0] and experimented on using the grid5000 grenoble cluster[1]. It allows for the efficient storage of 100 billion immutable small objects (median size of 4KB). It will be used by the Software Heritage project to keep accumulating the publicly available source code that is constantly growing. Software Heritage already published articles[2][3] and more are expected in the future. Their work would not be possible without this novel object storage architecture because the current solutions are either not efficient enough or too costly.

Request for resources:

- Site grenoble

- 32 dahu nodes if possible, 28 minimum https://www.grid5000.fr/w/Grenoble:Hardware#dahu

- 4 yeti nodes if possible, 2 minimum https://www.grid5000.fr/w/Grenoble:Hardware#yeti

- Date of the reservation: August 6th or 14th or 21st, 7pm

- Duration: 9 days

The goal is to run a benchmark demonstrating that the object storage architecture delivers the expected results in an experimental environment at scale. Runs over the weekend (60 hours) show that it behaves as expected, but they do not exhaust the resources of the cluster (using only 20% of the disk capacity). Running the benchmark for 9 days would make it possible to use approximately 100TB of storage instead of 20TB. It is still only a fraction of the target volume (10PB) but it may reveal issues that could not be observed on a smaller scale.

Cheers

[0] https://wiki.softwareheritage.org/wiki/A_practical_approach_to_efficiently_store_100_billions_small_objects_in_Ceph

[1] https://forge.softwareheritage.org/T3149

[2] https://www.softwareheritage.org/wp-content/uploads/2021/03/ieee-sw-gender-swh.pdf

[3] https://hal.archives-ouvertes.fr/hal-02543794

Received yesterday:

Hello Loïc,

Your request is approved.

You can reserve 30 dahu and 3 yeti nodes from August 6th for 9 days (we would like to keep at least one node available from each cluster).

Have a nice weekend,

$ oarsub -t exotic -l "{cluster='dahu'}/host=30+{cluster='yeti'}/host=3,walltime=216" --reservation '2021-08-06 19:00:00' -t deploy

[ADMISSION RULE] Include exotic resources in the set of reservable resources (this does NOT exclude non-exotic resources).

[ADMISSION RULE] Error: Walltime too big for this job, it is limited to 168 hours

The usual grid5000 contact is on vacation, falling back to his replacement to resolve this.

Mail sent today:

Hi Simon,

I was about to make the reservation and ran into the following problem:

$ oarsub -t exotic -l "{cluster='dahu'}/host=30+{cluster='yeti'}/host=3,walltime=216" --reservation '2021-08-06 19:00:00' -t deploy

[ADMISSION RULE] Include exotic resources in the set of reservable resources (this does NOT exclude non-exotic resources).

[ADMISSION RULE] Error: Walltime too big for this job, it is limited to 168 hours

Would you be so kind as to let me know how I can work around it? In the meantime I reserved for 163 hours (job 2019935) just to make sure the time slot is not inadvertently occupied by another request.

Thanks again for your help and have a wonderful day!

Reply:

We have a procedure for this kind of case: I added you to the "oar-unrestricted-adv-reservations" group, which should lift all restrictions on advance reservations of resources. You should therefore be able to redo your reservation with the correct walltime.

I set an expiration date of September 12th on this group to be sure it is enough, but remember to submit a new special usage request if you have another need outside the charter after the August one.

$ oarsub -t exotic -l "{cluster='dahu'}/host=30+{cluster='yeti'}/host=3,walltime=216" --reservation '2021-08-06 19:00:00' -t deploy

[ADMISSION RULE] Include exotic resources in the set of reservable resources (this does NOT exclude non-exotic resources).

[ADMISSION RULE] ldachary is granted the privilege to do unlimited reservations

[ADMISSION RULE] Computed global resource filter: -p "(deploy = 'YES') AND maintenance = 'NO'"

[ADMISSION_RULE] Computed resource request: -l {"(cluster='dahu') AND type = 'default'"}/host=30+{"(cluster='yeti') AND type = 'default'"}/host=3

Generate a job key...

OAR_JOB_ID=2019986

Reservation mode: waiting validation...

Reservation valid --> OK

Rehearse the run and make minor updates to make sure it starts right away this Friday.

https://git.easter-eggs.org/biceps/biceps/-/tree/18c2bad480da19bd468c4be8b4bffa610ec6f88d

https://intranet.grid5000.fr/oar/Grenoble/monika.cgi?job=2025313

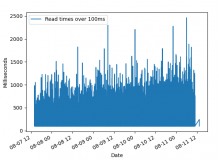

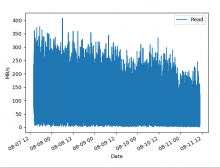

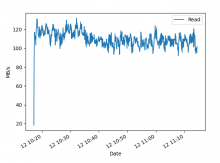

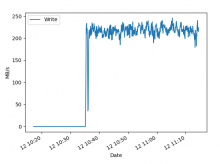

The run terminated August 11th @ 15:21 because of what appears to be a rare race condition. It was however mostly finished. The results show an unexpected degradation in read performance. It deserves further investigation because it keeps degrading over time. The write performance is however stable, which suggests the benchmark code itself may be responsible for this degradation: if the Ceph cluster were globally slowing down, both reads and writes would degrade, because previous benchmark results showed that the two are correlated.

Bytes write 106.4 MB/s

Objects write 5.2 Kobject/s

Bytes read 94.6 MB/s

Objects read 23.1 Kobject/s

1014323 random reads take longer than 100ms (2.1987787007491675%)
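The summary above can be cross-checked with a little arithmetic. The sketch below is only an illustration: it assumes decimal units (1 MB = 10^6 bytes, 1 Kobject = 1000 objects), and the helper names are hypothetical, not part of the benchmark code. It derives the average object size from the throughput figures and the total number of random reads implied by the slow-read count and its percentage.

```python
def average_object_size(bytes_per_s: float, objects_per_s: float) -> float:
    """Average number of bytes per object for a given throughput."""
    return bytes_per_s / objects_per_s


def implied_total(slow_count: int, slow_percent: float) -> int:
    """Total number of reads implied by a slow-read count and its percentage."""
    return round(slow_count * 100 / slow_percent)


MB = 1_000_000  # assumption: decimal megabytes
K = 1_000       # assumption: 1 Kobject = 1000 objects

# Figures copied from the run summary.
write_size = average_object_size(106.4 * MB, 5.2 * K)
read_size = average_object_size(94.6 * MB, 23.1 * K)
total_random_reads = implied_total(1014323, 2.1987787007491675)

print(f"average written object: {write_size / 1000:.1f} KB")
print(f"average read object:    {read_size / 1000:.1f} KB")
print(f"random reads in total:  {total_random_reads}")
```

Under these assumptions the average object is roughly 20 KB on the write side and roughly 4 KB on the read side.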

https://git.easter-eggs.org/biceps/biceps/-/tree/4e998f180f1cc4ca00acefb552220b3992bd7a25

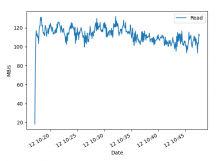

The benchmarks were modified to (i) use a fixed number of random / sequential readers instead of a random choice, for better predictability, and (ii) introduce throttling to cap the sequential read speed at approximately 200MB/s. A read-only run was started:

- ansible-playbook -i inventory tests-run.yml && ssh -t $runner direnv exec bench python bench/bench.py --reader-io 500 --rw-workers 0 --rand-ratio 5 --file-count-ro 0 --ro-workers 20 --file-size $((1 * 1024))

At the same time, rbd bench was run to write continuously to a single image at ~200MB/s. The rbd bench started a few minutes after the reads. It will run for the next 24h to verify that:

- write speed is stable

- read speed is stable

- slow reads improved and stay under 2%
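The throttling of sequential reads mentioned above (a cap at approximately 200MB/s) can be illustrated with a token bucket. This is a minimal sketch under stated assumptions and not the code used by the benchmark: the `Throttle` class, its parameters and the injectable `clock` / `sleep` functions are all hypothetical.

```python
import time


class Throttle:
    """Token bucket limiting throughput to `rate` bytes per second."""

    def __init__(self, rate: float, clock=time.monotonic, sleep=time.sleep):
        self.rate = rate
        self.clock = clock    # injectable so the class can be tested without waiting
        self.sleep = sleep
        self.tokens = 0.0     # bytes that may be read right now
        self.last = clock()

    def consume(self, nbytes: int) -> float:
        """Account for nbytes just read and sleep if the budget is exceeded.

        Returns the number of seconds slept.
        """
        now = self.clock()
        # Refill according to elapsed time, capped at one second worth of
        # traffic so that a long idle period cannot cause a large burst.
        self.tokens = min(self.rate, self.tokens + (now - self.last) * self.rate)
        self.last = now
        self.tokens -= nbytes
        if self.tokens >= 0:
            return 0.0
        delay = -self.tokens / self.rate
        self.sleep(delay)
        return delay
```

A sequential reader would call `throttle.consume(len(chunk))` after each read, with `throttle = Throttle(200 * 1024 * 1024)` shared by all sequential readers if the cap is meant to be global.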

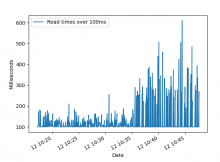

The number of slow random reads reaches ~3.5% presumably because there is too much write pressure (the throttling of writes was removed).

stats.csv 100% 89KB 509.8KB/s 00:00

too_long.csv 100% 380KB 2.0MB/s 00:00

Bytes write 0 B/s

Objects write 0 object/s

Bytes read 105.1 MB/s

Objects read 25.7 Kobject/s

16766 random reads take longer than 100ms (3.4325045859538785%)

Throttling writes to 120MB/s to reduce the pressure:

- ceph config set client rbd_qos_write_bps_limit $((120 * 1024 * 1024))
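The shell arithmetic in the command above expresses the limit in bytes per second using binary units. A short sketch of the same computation (the `bps_limit` helper is hypothetical) shows the resulting value and its decimal equivalent:

```python
MIB = 1024 * 1024  # bytes in one mebibyte


def bps_limit(mib_per_s: int) -> int:
    """Bytes-per-second value equivalent to $((mib_per_s * 1024 * 1024))."""
    return mib_per_s * MIB


limit = bps_limit(120)
print(limit)                      # 125829120
print(f"{limit / 1e6:.1f} MB/s")  # 125.8 MB/s
```

The limit is therefore 120 MiB/s, slightly above 120 MB/s in decimal units.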

After 20 minutes or so:

Bytes write 0 B/s

Objects write 0 object/s

Bytes read 105.2 MB/s

Objects read 25.7 Kobject/s

26512 random reads take longer than 100ms (3.508214769647697%)
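The two summaries can be compared to estimate the slow-read rate during the interval between them. The sketch below assumes, without confirmation from the benchmark code, that both summaries report cumulative counters of the same run; if they do not, the result is meaningless. The helper name is hypothetical.

```python
def implied_total(slow_count: int, slow_percent: float) -> int:
    """Total number of reads implied by a slow-read count and its percentage."""
    return round(slow_count * 100 / slow_percent)


# (slow random reads, percentage) copied from the two summaries.
before = (16766, 3.4325045859538785)  # before throttling writes
after = (26512, 3.508214769647697)    # about 20 minutes after throttling

total_before = implied_total(*before)
total_after = implied_total(*after)

# Assumption: counters are cumulative, so the differences describe
# only the reads performed between the two summaries.
new_reads = total_after - total_before
new_slow = after[0] - before[0]
interval_percent = new_slow * 100 / new_reads

print(f"{new_slow} slow reads out of {new_reads} random reads "
      f"since the previous summary ({interval_percent:.2f}%)")
```

If the assumption holds, the rate over the interval is slightly higher than both cumulative figures, which would mean that throttling the writes had not yet reduced the proportion of slow reads.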