Given the benchmark results in T3149, what hardware architecture could support the object storage design? See also T3054 for more context.

Here is a high-level description of the minimal hardware setup.

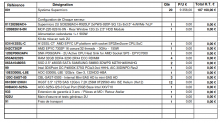

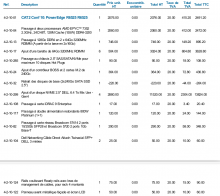

Network

- 10Gb 16-port switch

Write Storage

If the failure domain is the host, there must be two of each machine listed here. If the failure domain is the disk, additional disks must be added for RAID5 or RAID6.

- 1 Global Index: disks == 4TB nvme, nproc == 48, ram == 128GB, network == 10Gb

- 1 Write ingestion: disks == 6TB nvme, nproc == 64, ram == 256GB, network == 10Gb

The global index uses 125 bytes per entry once ingested in PostgreSQL. Each entry carries 40 bytes of payload: 32 bytes for the cryptographic signature plus 8 bytes for the identifier of the shard in which the corresponding object can be found. A unique index is created on the cryptographic signature.
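
As a rough sanity check of the figures above, the following sketch estimates how many entries fit on the 4TB Global Index disk at 125 bytes per entry. It assumes decimal terabytes and ignores WAL, filesystem overhead and free-space headroom, so the result is an upper bound rather than a sizing recommendation. The function and constant names are illustrative only.

```python
# Figures taken from the text; everything else is an assumption.
BYTES_PER_ENTRY = 125           # size per entry once ingested in PostgreSQL
PAYLOAD_BYTES = 32 + 8          # cryptographic signature + shard identifier
INDEX_DISK_BYTES = 4 * 10**12   # 4TB nvme, assuming decimal terabytes


def max_index_entries(disk_bytes: int, bytes_per_entry: int = BYTES_PER_ENTRY) -> int:
    """Upper bound on the number of entries that fit on the disk.

    Ignores WAL, filesystem overhead and free-space headroom.
    """
    return disk_bytes // bytes_per_entry


def storage_amplification(bytes_per_entry: int = BYTES_PER_ENTRY,
                          payload_bytes: int = PAYLOAD_BYTES) -> float:
    """Ratio of on-disk bytes per entry to raw payload bytes per entry."""
    return bytes_per_entry / payload_bytes


if __name__ == "__main__":
    print(max_index_entries(INDEX_DISK_BYTES))  # 32000000000
    print(storage_amplification())              # 3.125
```

Under these assumptions the disk holds at most 32 billion entries, and each entry takes about 3.1 times its 40-byte payload on disk.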

Read Storage

- 3 monitor/orchestrator: disks == 500GB ssd + 4TB storage, nproc == 8, ram == 32GB, network == 10Gb

- 7 osd: disks == 500GB ssd + 10 x 8TB/12TB, nproc == 16, ram == 128GB, network == 10Gb
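
The raw capacity of the osd machines follows directly from the numbers above. The sketch below computes it for both disk sizes. The usable capacity depends on the redundancy scheme, which is not specified here, so the replication factor is a hypothetical parameter and not part of the design.

```python
# Figures taken from the list above.
OSD_NODES = 7
DISKS_PER_OSD = 10


def raw_capacity_tb(disk_tb: int, nodes: int = OSD_NODES,
                    disks_per_node: int = DISKS_PER_OSD) -> int:
    """Raw capacity in TB across all osd machines, before any redundancy."""
    return nodes * disks_per_node * disk_tb


def usable_capacity_tb(raw_tb: float, replication_factor: int) -> float:
    """Usable capacity under plain N-way replication.

    replication_factor is an assumption for illustration only; an erasure
    coded pool would use a different formula.
    """
    return raw_tb / replication_factor


if __name__ == "__main__":
    for disk_tb in (8, 12):
        print(f"{disk_tb}TB disks: {raw_capacity_tb(disk_tb)}TB raw")
```

With 8TB disks this gives 560TB raw, and with 12TB disks 840TB raw.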

Clients

Each client host runs up to 20 daemons serving client requests for the Read Storage and the Write Storage.

- 2 daemons: disks == 500GB ssd + 4TB storage, nproc == 24, ram == 32GB, network == 10Gb

See also https://www.supermicro.com/en/solutions/red-hat-ceph