If we choose to move to Kubernetes, we will probably need to manage several clusters to isolate concerns.

For example, we will need at least a production cluster and a staging cluster, plus some sandboxes for developers and tests.

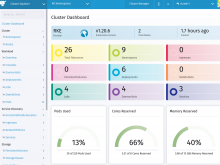

Rancher is supposed to provide an easy way to centralize cluster management, but it needs to be tested to see what is possible.

The first step is to deploy a basic installation and play with it, but the goal is to answer the following points:

- pros and cons compared to a manual installation

- automation capabilities (is there any integration with Terraform or Puppet?)

- operational cost (for example, what does an upgrade cost?)

- ...